Swift Student Challenge 2025

Pretty late to the party, but this is my project for the Swift Student Challenge 2025. Please pretend I posted this in February.

The Idea

When I went to a Vision Pro developer lab, I felt it was kinda difficult to type, because I kept playing 'don't look at the delete-everything button'. Since then, I have been thinking about other ways to type. One idea I liked was typing on your hand, a bit like the Lorm alphabet. ALSO: I am neither blind nor deaf, I do not speak sign language, and I do not currently know anyone who is either or both. The next part is based on my internet research, and I hope I worded it correctly.

The Lorm Alphabet

The Lorm alphabet is a tool used by people who are both blind and deaf but grew up learning a language other than sign language. Basically, you take the hand of the person you want to talk to and type the words you want to say into their hand. Lorm is mainly used in the German-speaking area (and also in the Netherlands, Switzerland and the Czech Republic). It was originally invented by Heinrich Landesmann, an Austrian poet and writer who became deaf at 16 and later went blind as well. The Lorm alphabet was invented so that he could communicate with his wife and daughter.

Why is it named Lorm then?

He went by the pen name Hieronymus Lorm.

Why mostly people who grew up with a language other than sign language?

Lorm is not a language; it is a tool, in the same way a keyboard is a tool. So you already need to know a language that is written with letters. Sign language has a different grammar and structure than spoken/written language (apparently it's similar to French? I know neither, so I couldn't confirm).

There is also a tactile version of sign language (called 'Hand-over-Hand' or 'Hand-on-Signing'), where the 'listener' feels the shapes (the signs) the other person's hands are making. This can be more intuitive than Lorm, but it depends on which language the person learned and prefers. Lorm is easy to learn for typing, but actually more difficult to understand, and it takes focus and practice. (I linked some resources, so you can try it yourself.)

By the way, there are multiple tactile alphabets based on typing on someone else's hand. For instance, there is Malossi (mainly used in Italy, based on tapping or double-tapping joints) and Niessen (a lot like Lorm, but on the back of the hand instead of the palm). There is also tactile fingerspelling, where you feel the finger alphabet; it is just as fast for communication as Lorm. There are many more, so you should read up on them!

The Project

Now that I feel like I've been talking for an hour, I can finally tell you about my project. I've been using the new Vision framework.

Important note: 'Vision' as in 'computer vision', not 'visionOS/Vision Pro'.

The Vision framework has been around for quite some time, but last year at WWDC24 Apple announced a new version of it. The gist is that you drop the 'VN' prefix to get the new Swift-native API instead of the now-legacy one (for example, `DetectHumanHandPoseRequest` instead of `VNDetectHumanHandPoseRequest`). (Maybe I will write about this in more detail later?)

Start and MVP

I started with the Hand Drawing sample project. It detects where the thumb and index finger of the most prominent hand are and shows those two points. If they touch, it draws lines. As a second 'opinion', I looked at the new OCR sample project. That one is built with the new framework; it detects text in a picture and places rectangles over the text.

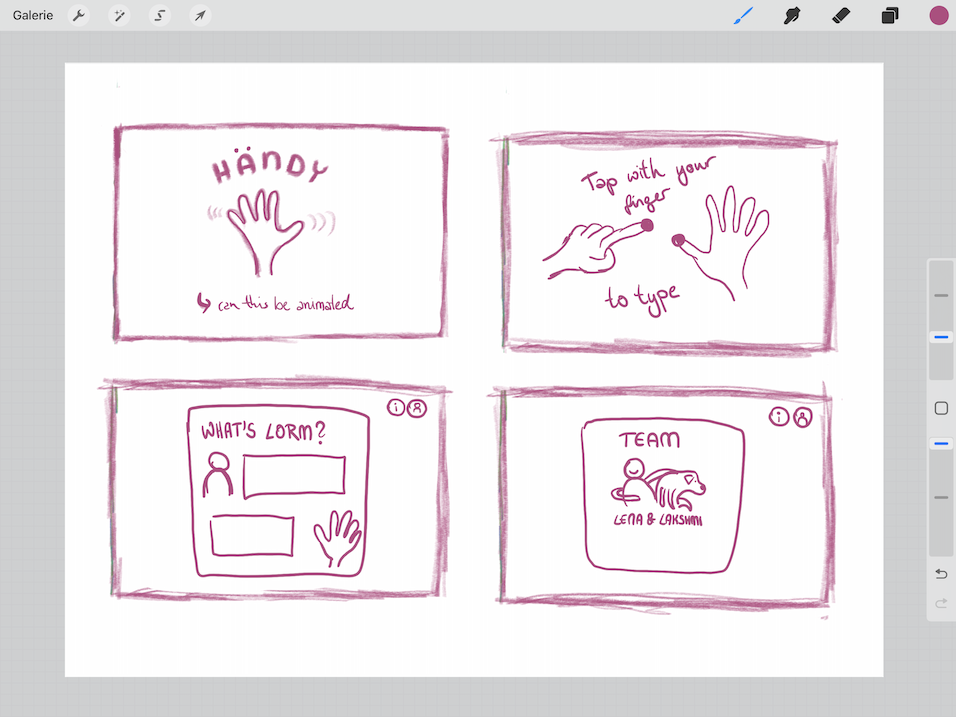

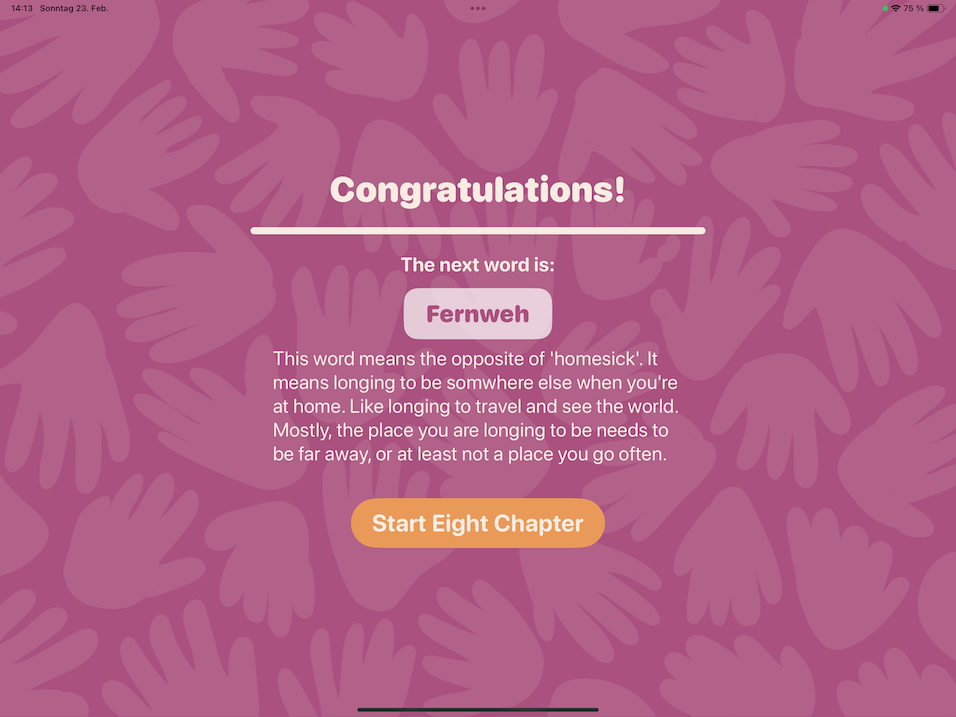
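
The 'do they touch?' check from the hand sample boils down to a distance test between two points. Here is a minimal sketch in plain Swift, not the sample's actual code. It assumes the thumb-tip and index-tip locations have already been read from a hand-pose observation as normalized (0 to 1) image coordinates. `HandPoint`, `isPinching` and the `0.05` threshold are hypothetical, picked for illustration.

```swift
/// A point in normalized image coordinates (0...1 on both axes).
/// Hypothetical type: in an app these values would come from a Vision hand-pose observation.
struct HandPoint {
    var x: Double
    var y: Double
}

/// Euclidean distance between two normalized points.
func distance(_ a: HandPoint, _ b: HandPoint) -> Double {
    let dx = a.x - b.x
    let dy = a.y - b.y
    return (dx * dx + dy * dy).squareRoot()
}

/// Treats thumb tip and index tip as "touching" when they are closer than the threshold.
/// The default threshold is an illustrative guess, not a tuned value.
func isPinching(thumbTip: HandPoint, indexTip: HandPoint, touchThreshold: Double = 0.05) -> Bool {
    distance(thumbTip, indexTip) < touchThreshold
}

let thumb = HandPoint(x: 0.50, y: 0.50)
print(isPinching(thumbTip: thumb, indexTip: HandPoint(x: 0.52, y: 0.51)))  // true
print(isPinching(thumbTip: thumb, indexTip: HandPoint(x: 0.70, y: 0.50)))  // false
```

Because the points are normalized to the image, the same physical gap looks smaller when the hand is further from the camera. A fixed threshold like this is therefore only a rough approximation.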

By starting with the samples, I was able to get a working prototype quickly. I then started sketching out what I actually wanted the project to do. I thought it might be fun to have the user type out some words that are important in my life: the title of my favourite TV show, my favourite English word, and so on.

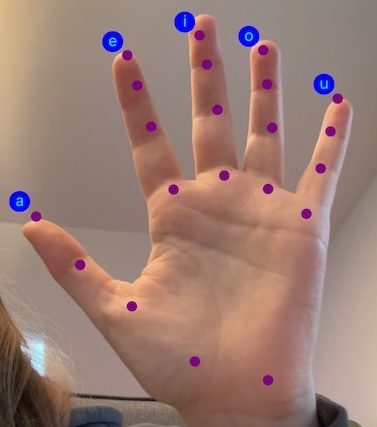

Typing

I spent quite a lot of time on the typing. Lorm also includes some swiping and pinching gestures. Because I am using Vision and can only detect touch points in 2D space, I decided to go with just tapping your joints. At first that was quite cumbersome: it was very difficult to type, and I didn't have a good layout yet. Then I switched to a layout based on how often letters appear in written German and English, which already made typing a lot easier. For a short while, you could also double tap for some shortcuts, like using the C to get to a K, as the sounds are very similar. But I forgot all the shortcuts almost immediately, and they also led to a lot of wrongly typed letters, so I removed that option again.
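
Tapping a joint can be sketched as 'find the base-hand joint closest to the tapping fingertip'. The frequency-based layout can be sketched as pairing the most comfortable joints with the most frequent letters. This is a minimal sketch under assumptions, not the app's real code. `Joint`, `HandPoint`, `makeLayout` and `letterForTap(at:joints:layout:maxDistance:)` are hypothetical names. The joint comfort order and the letter string are illustrative placeholders rather than the actual layout, and the `0.04` tap radius is an arbitrary guess.

```swift
/// A point in normalized image coordinates (0...1). Hypothetical type.
struct HandPoint {
    var x: Double
    var y: Double
}

func distance(_ a: HandPoint, _ b: HandPoint) -> Double {
    let dx = a.x - b.x
    let dy = a.y - b.y
    return (dx * dx + dy * dy).squareRoot()
}

/// Hypothetical base-hand joints, listed from most to least comfortable to tap.
/// Only a few joints are shown, and the order is an assumption for illustration.
enum Joint: String, CaseIterable {
    case indexTip, middleTip, ringTip, littleTip, indexPIP, middlePIP
}

/// Pairs the most comfortable joints with the most frequent letters.
/// If there are more letters than joints, the extra letters are left out.
func makeLayout(jointsByComfort: [Joint], lettersByFrequency: [Character]) -> [Joint: Character] {
    var result: [Joint: Character] = [:]
    for (joint, char) in zip(jointsByComfort, lettersByFrequency) {
        result[joint] = char
    }
    return result
}

/// Returns the letter on the joint closest to the tapping fingertip,
/// or nil if no joint is within `maxDistance`.
func letterForTap(at fingertip: HandPoint,
                  joints: [Joint: HandPoint],
                  layout: [Joint: Character],
                  maxDistance: Double = 0.04) -> Character? {
    guard let nearest = joints.min(by: { distance($0.value, fingertip) < distance($1.value, fingertip) }),
          distance(nearest.value, fingertip) <= maxDistance else {
        return nil
    }
    return layout[nearest.key]
}

// Placeholder letter order for illustration only (not the app's real layout).
let layout = makeLayout(jointsByComfort: Joint.allCases, lettersByFrequency: Array("enisra"))

// Made-up joint positions for one base hand.
let joints: [Joint: HandPoint] = [
    .indexTip: HandPoint(x: 0.40, y: 0.80),
    .middleTip: HandPoint(x: 0.50, y: 0.85),
    .ringTip: HandPoint(x: 0.60, y: 0.82),
    .littleTip: HandPoint(x: 0.70, y: 0.75),
    .indexPIP: HandPoint(x: 0.42, y: 0.65),
    .middlePIP: HandPoint(x: 0.50, y: 0.68),
]

print(letterForTap(at: HandPoint(x: 0.41, y: 0.79), joints: joints, layout: layout) ?? "-")  // e
print(letterForTap(at: HandPoint(x: 0.90, y: 0.10), joints: joints, layout: layout) ?? "-")  // -
```

The radius check matters as much as the nearest-joint search. Without it, every stray fingertip position anywhere in the frame would still produce some letter.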

Constraints

Lastly, I wanted to make the app a bit easier to learn, so I decided that wrong letters shouldn't stick: they now get removed after two seconds. This makes typing a lot faster and more comfortable. I felt this limitation is okay, because this is more of a proof of concept than an actual keyboard. There is another constraint I am working with: because this is a Student Challenge project, it has to work on the iPad, not on the Apple Vision Pro. I feel the concept would work better on a Vision Pro, because you would be looking down at your hands. There is also a lot more 3D information available on the Vision Pro, and ARKit can recognise more points. I think it would be interesting to see how it would work on a Vision Pro, maybe even using some machine learning to incorporate gestures.
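
The 'wrong letters disappear after two seconds' behaviour can be modelled as a small buffer. It remembers when each letter was typed and whether it matched the next letter of the target word. This is a minimal sketch, assuming timestamps in seconds are passed in from outside (for example from a clock or a frame timestamp). `TypedLetter`, `GuidedTypingBuffer` and their methods are hypothetical names, not the app's actual types.

```swift
/// One typed letter, whether it matched the target, and when it was typed (seconds).
struct TypedLetter {
    let character: Character
    let isCorrect: Bool
    let typedAt: Double
}

/// Hypothetical model of guided typing: wrong letters stay visible briefly, then vanish.
struct GuidedTypingBuffer {
    let target: [Character]
    let wrongLetterLifetime: Double = 2.0
    private(set) var letters: [TypedLetter] = []

    init(target: String) {
        self.target = Array(target)
    }

    /// How many letters of the target have been typed correctly so far.
    var correctCount: Int { letters.filter(\.isCorrect).count }

    var isComplete: Bool { correctCount == target.count }

    /// What the user currently sees.
    var text: String { String(letters.map(\.character)) }

    /// Records a tap; it counts as correct if it matches the next expected letter (case-insensitive).
    mutating func record(_ character: Character, at time: Double) {
        guard !isComplete else { return }
        let expected = target[correctCount]
        let isCorrect = character.lowercased() == expected.lowercased()
        letters.append(TypedLetter(character: character, isCorrect: isCorrect, typedAt: time))
    }

    /// Removes wrong letters that have been visible for at least `wrongLetterLifetime` seconds.
    mutating func removeExpiredMistakes(now: Double) {
        let lifetime = wrongLetterLifetime  // local copy avoids overlapping access to self
        letters.removeAll { !$0.isCorrect && now - $0.typedAt >= lifetime }
    }
}

var buffer = GuidedTypingBuffer(target: "Lorm")
buffer.record("l", at: 0.0)
buffer.record("x", at: 0.5)           // wrong: "o" was expected
print(buffer.text)                    // lx
buffer.removeExpiredMistakes(now: 1.0)
print(buffer.text)                    // lx (the mistake is only 0.5 s old)
buffer.removeExpiredMistakes(now: 2.5)
print(buffer.text)                    // l
```

Passing the time in explicitly, instead of reading the clock inside the buffer, keeps the logic easy to test. In an app, `removeExpiredMistakes(now:)` would be called periodically, for example once per processed camera frame.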

Personality

On the side you can see some screenshots of what the app looks like. I made a lot of textures and tried to use warm colours and a rounded font to give the app a lot of 'personality'. The textures were all drawn in Procreate; you can also see some of them on the side. For the key texture, I took some pictures of my keyboard, layered them and added some loose keycaps. I have a half-filled version, an outlined version and a fully filled version. (There are also multiple versions of the hand pattern.)

I also included some videos here of what you can do. You can rotate your hands to reach points more easily, and you can also switch hands. I am left-handed, so it's easiest for me to type on my right hand, but some might prefer it the other way round. As long as one hand is fully visible to the camera, that will be the base hand (where the letters appear). You can even switch hands in the middle of typing, if that's what you like! The video plays at the speed it actually took me to type the words. There aren't enough joints on the hand to fit all the letters of the German alphabet, so there is a second layout: touching your wrist switches between the two. The first layout has the most common letters, and the second one has the less common ones.
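
Vision's hand-pose detection reports 21 joints per hand. With the wrist reserved for switching, 20 joints are left, which is fewer than the 30 letters of the German alphabet (including Ä, Ö, Ü and ß). The wrist toggle and the 'fully visible hand becomes the base hand' rule can be sketched like this. It is a hypothetical model, not the app's code. Joints are reduced to numbered slots, the letter strings are placeholders, and the `0.5` confidence cut-off is an arbitrary assumption.

```swift
/// What a tap landed on. Hypothetical: the non-wrist joints are just numbered slots.
enum TapTarget {
    case wrist
    case joint(Int)
}

/// Two-layout keyboard: tapping the wrist toggles layouts,
/// tapping a joint types the letter assigned to that slot in the active layout.
struct TwoLayoutKeyboard {
    let layouts: [[Character]]          // [most common letters, less common letters]
    private(set) var activeLayout = 0

    init(common: String, lessCommon: String) {
        layouts = [Array(common), Array(lessCommon)]
    }

    /// Returns the typed letter, or nil for a layout switch or an empty slot.
    mutating func handleTap(on target: TapTarget) -> Character? {
        switch target {
        case .wrist:
            activeLayout = (activeLayout + 1) % layouts.count
            return nil
        case .joint(let slot):
            let layout = layouts[activeLayout]
            return layout.indices.contains(slot) ? layout[slot] : nil
        }
    }
}

/// Picks the first hand whose joints are all confidently detected, i.e. fully visible.
/// `jointConfidences` holds one array of per-joint confidence values per detected hand.
func baseHandIndex(jointConfidences: [[Double]], minimumConfidence: Double = 0.5) -> Int? {
    jointConfidences.firstIndex { hand in
        !hand.isEmpty && hand.allSatisfy { $0 >= minimumConfidence }
    }
}

// Placeholder letter strings, not the app's real layouts.
var keyboard = TwoLayoutKeyboard(common: "enisratdhu", lessCommon: "lcgmobwfkz")
print(keyboard.handleTap(on: .joint(0)) ?? "-")   // e
_ = keyboard.handleTap(on: .wrist)                // switch to the second layout
print(keyboard.handleTap(on: .joint(0)) ?? "-")   // l

// The second hand is fully visible, so it becomes the base hand.
print(baseHandIndex(jointConfidences: [[0.9, 0.2, 0.8], [0.9, 0.8, 0.7]]) ?? -1)  // 1
```

Re-running `baseHandIndex` on every frame is what would make switching hands mid-typing work in this model. Whichever hand is currently fully visible becomes the base hand.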

All in all, I had a lot of fun working on this project. The new Vision framework is delightful!